Organizations are investing heavily in artificial intelligence, yet many projects struggle to move beyond the proof-of-concept (POC) stage. AI implementers and project leaders often face significant hurdles when transitioning from experimental validation to practical, scalable deployment. This article examines the most common pitfalls that keep AI projects from reaching production and shares insights supported by industry reports and studies.

Pitfall 1: Unclear Success Criteria

Many AI projects begin without clear, measurable goals. Without precise exit criteria, stakeholders struggle to agree on whether the project is successful or ready for deployment.

Excerpt (Gartner via Informatica blog): “Only 48% of AI projects make it into production, and it takes 8 months to go from prototype to production… at least 30% of generative AI (GenAI) projects will be abandoned after proof of concept by the end of 2025, due to poor data quality, inadequate risk controls or unclear business value.” (Informatica)

How to Avoid: Establish Clear Success Metrics

Define precise KPIs and exit criteria early in the POC stage. In our most recent enterprise case study, the client accepted the accuracy rate we recommended based on our eval runs, but required our team to reach a user-acceptable latency before approving production deployment.
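To make the idea of exit criteria concrete, here is a minimal Python sketch of a pass/fail check on one eval run. The `ExitCriteria` class, the function names, and the threshold values (90% accuracy, 2-second p95 latency) are illustrative assumptions, not figures from the case study above.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ExitCriteria:
    """Thresholds agreed with stakeholders (values used below are hypothetical)."""
    min_accuracy: float        # share of eval cases answered correctly
    max_p95_latency_s: float   # 95th-percentile response time, in seconds


def percentile_nearest_rank(values, percent):
    """Return the nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(percent * len(ordered) / 100))
    return ordered[rank - 1]


def check_exit_criteria(correct_flags, latencies_s, criteria):
    """Compare one eval run against the agreed exit criteria."""
    accuracy = sum(correct_flags) / len(correct_flags)
    p95_latency = percentile_nearest_rank(latencies_s, 95)
    return {
        "accuracy": accuracy,
        "p95_latency_s": p95_latency,
        "passed": accuracy >= criteria.min_accuracy
        and p95_latency <= criteria.max_p95_latency_s,
    }


if __name__ == "__main__":
    # Hypothetical run: accuracy meets the bar, but one slow response
    # pushes the p95 latency over the budget.
    criteria = ExitCriteria(min_accuracy=0.9, max_p95_latency_s=2.0)
    print(check_exit_criteria([True] * 9 + [False], [0.5] * 9 + [3.0], criteria))
```

Writing the thresholds down as data means that "ready for production" becomes a yes/no answer that every stakeholder can inspect, rather than a matter of opinion.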

Pitfall 2: Ignoring Production Readiness from the Start

AI experiments often overlook the technical realities of production environments, such as scalability, latency, and integration complexity.

Excerpt (IDC via NetApp): IDC research highlights infrastructural and scalability gaps as central barriers, with fewer than 15% of AI pilots reaching production due to insufficient infrastructure. (NetApp)

How to Avoid: Prioritize Production Readiness

Plan for scalability, integration, and performance optimization from the outset. During the scoping or customer development phase, the AI product and DevOps teams should establish the expected ticket volume so they can anticipate token usage tier limits and plan capacity accordingly.
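As a rough illustration of that capacity planning, the Python sketch below converts an expected ticket volume into a peak tokens-per-minute estimate and compares it with a tier limit. Every input here (tickets per day, messages per ticket, tokens per message, the peak factor, and the tier limit) is a hypothetical placeholder; real limits depend on your model provider and plan.

```python
def peak_tokens_per_minute(tickets_per_day, messages_per_ticket,
                           tokens_per_message, peak_factor):
    """Estimate peak token throughput from expected ticket volume.

    peak_factor is an assumed multiplier for how much busier the peak
    minute is than the daily average.
    """
    daily_tokens = tickets_per_day * messages_per_ticket * tokens_per_message
    average_per_minute = daily_tokens / (24 * 60)
    return average_per_minute * peak_factor


def tier_headroom(peak_tpm, tier_limit_tpm):
    """Fraction of the tier limit left unused at peak (negative means over the limit)."""
    return 1 - peak_tpm / tier_limit_tpm


if __name__ == "__main__":
    # Hypothetical workload and tier limit, for illustration only.
    peak = peak_tokens_per_minute(
        tickets_per_day=1440,
        messages_per_ticket=5,
        tokens_per_message=1000,
        peak_factor=4,
    )
    print(f"Estimated peak: {peak:.0f} tokens/min")
    print(f"Headroom on a 40,000 tokens/min tier: {tier_headroom(peak, 40_000):.0%}")
```

Even a back-of-the-envelope estimate like this, done during scoping, shows whether the expected load fits the current tier or whether an upgrade needs to be requested before launch.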

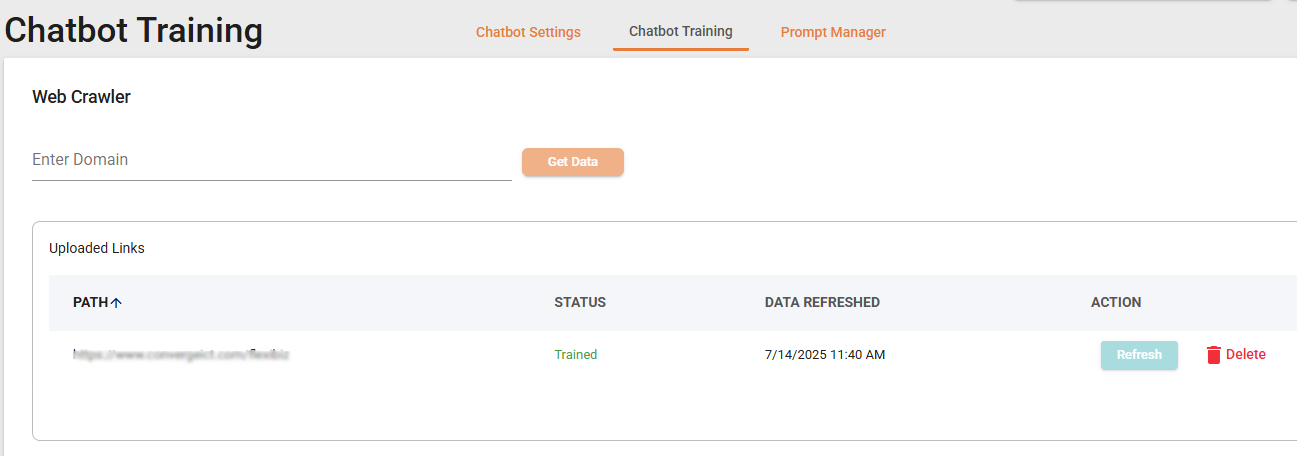

Pitfall 3: Insufficient Data Strategy

AI solutions are highly data-dependent. Many POCs underestimate the data quality, availability, and governance required to sustain long-term operational success.

Excerpt (Deloitte via WSJ CIO): Deloitte reports that 55% of organizations cite insufficient data quality, governance, and privacy compliance as key reasons their AI projects never reach production. (WSJ CIO)

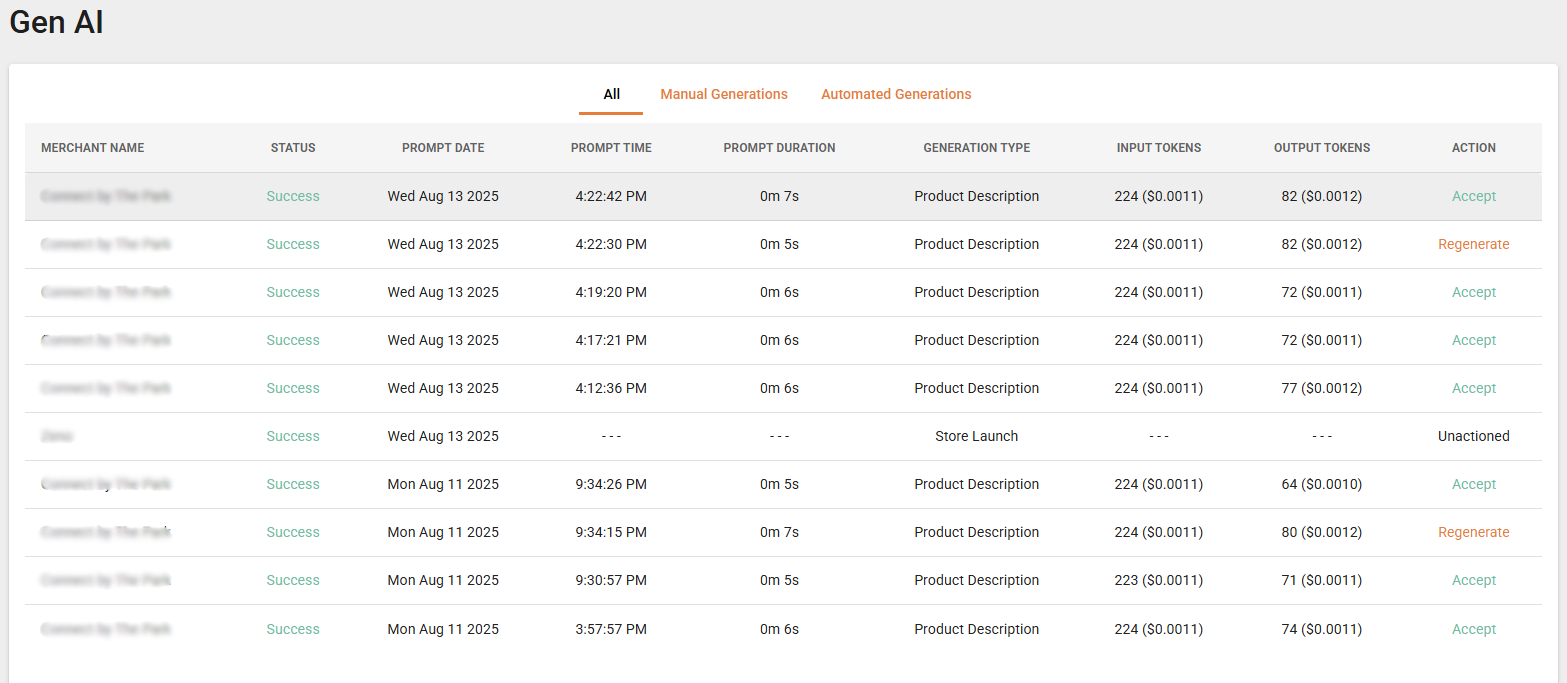

How to Avoid: Develop Robust Data Strategies

Make data quality, governance, and scalability central to your project. The pipeline that turns raw data into vector embeddings should be clearly defined and kept simple. Use representative, production-like datasets and continuous monitoring tools, such as an eval framework, to validate accuracy, latency, and readiness before deployment.
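The following Python sketch shows what a very small eval harness could look like: it runs a set of production-like questions through an answering function and records accuracy and latency. The `run_eval` function, the exact-match scoring rule, and the `canned_bot` stand-in are assumptions for illustration; this is not ChatGenie's Eval tooling, and a real harness would call the deployed model and use a scoring method suited to free-form answers.

```python
import time


def run_eval(answer_fn, cases):
    """Run (question, expected_answer) pairs through answer_fn.

    Scoring is a case-insensitive exact match, which is a simplifying
    assumption that only suits short, closed-form answers.
    """
    correct = 0
    latencies_s = []
    for question, expected in cases:
        start = time.perf_counter()
        answer = answer_fn(question)
        latencies_s.append(time.perf_counter() - start)
        if answer.strip().lower() == expected.strip().lower():
            correct += 1
    return {
        "accuracy": correct / len(cases),
        "mean_latency_s": sum(latencies_s) / len(latencies_s),
        "max_latency_s": max(latencies_s),
    }


def canned_bot(question):
    """Hypothetical stand-in for a real model call."""
    answers = {
        "What are your support hours?": "9am to 6pm",
        "Do you offer refunds?": "Yes",
    }
    return answers.get(question, "I don't know")


if __name__ == "__main__":
    cases = [
        ("What are your support hours?", "9am to 6pm"),
        ("Do you offer refunds?", "yes"),
        ("Where is my order?", "Please share your order number"),
        ("Can I change my plan?", "Yes"),
    ]
    print(run_eval(canned_bot, cases))
```

Running the same case set after every data or prompt change turns the eval into a regression check, so a drop in accuracy or a jump in latency is noticed before deployment.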

Pitfall 4: Underestimating User Adoption and Change Management

Even technically sound AI projects can falter if end-users are reluctant to adopt new technologies. Change management often receives too little attention during the POC stage.

Excerpt (McKinsey via Volonte): McKinsey advises that organizations should "invest twice as much in change management and adoption as in building the solution" to realize value from GenAI. (Volonte)

How to Avoid: Engage Users Early and Often

Avoid this pitfall by involving end-users early in the AI project and providing clear communication, training, and support to build confidence in the new system. A key part of our implementation plan is minimizing changes to existing workflows, for example by routing human-escalated chats directly into the team’s current ticketing system.

Pitfall 5: Neglecting Ethical and Compliance Issues

Failing to address potential regulatory compliance, ethical considerations, and bias in AI models early on can lead to costly adjustments or project cancellation.

Excerpt (TechRepublic): TechRepublic reports that ethical concerns, bias in AI models, and emerging regulations such as the EU AI Act have stalled numerous AI projects, emphasizing the importance of early ethical review and compliance measures. (TechRepublic)

How to Avoid: Address Ethical and Compliance Risks

Avoid this pitfall by embedding compliance and ethics into your AI project from the start. This means conducting bias and fairness audits during development, following relevant regulations and standards (like the EU AI Act, GDPR, and ISO 27001), and setting up ongoing governance processes to monitor compliance throughout the AI system’s lifecycle.
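One simple starting point for a bias audit is to compare answer accuracy across user groups and flag large gaps. The Python sketch below does that under stated assumptions: the group labels (`"en"`, `"tl"`), the sample records, and the 10-point gap tolerance are all hypothetical, and a real fairness audit would use metrics and thresholds chosen together with compliance and legal stakeholders.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group_label, answered_correctly) pairs."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, correct in records:
        totals[group] += 1
        hits[group] += int(correct)
    return {group: hits[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Difference between the best-served and worst-served group."""
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical eval results tagged by the user's language.
    records = (
        [("en", True)] * 4
        + [("tl", True)] * 2
        + [("tl", False)] * 2
    )
    rates = accuracy_by_group(records)
    gap = largest_gap(rates)
    print(rates, gap)
    if gap > 0.10:  # assumed tolerance, to be agreed per project
        print("Accuracy gap exceeds tolerance; review before release.")
```

A check like this does not establish that a system is fair, but it makes one kind of disparity visible early, when it is still cheap to fix.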

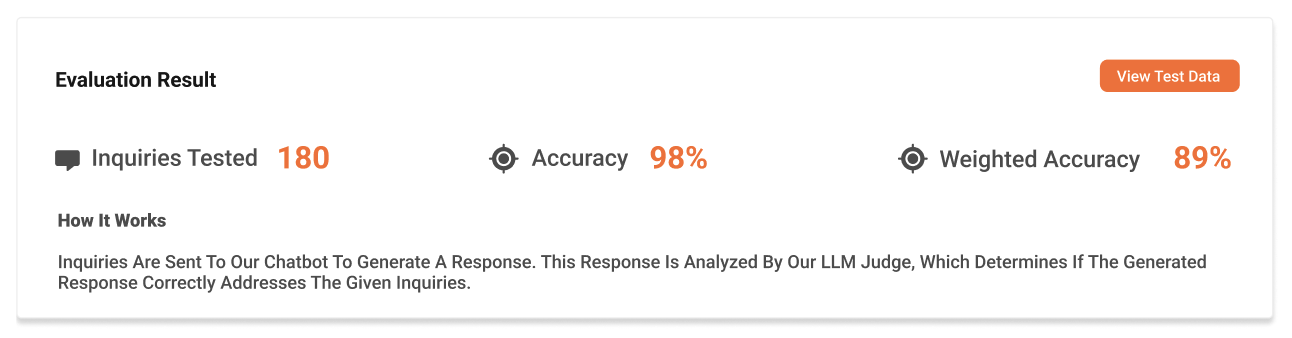

ChatGenie's Solution: ChatGenie helps enterprises avoid these pitfalls through its innovative Multi-AI Agent framework that ensures the safety, accuracy, and relevancy of AI outputs. Additionally, ChatGenie's comprehensive Eval tools enable precise measurement of key metrics such as accuracy and latency, providing confidence in transitioning from proof-of-concept to full-scale production.

Understanding and proactively addressing these common pitfalls significantly increases the likelihood of successfully moving AI projects from proof-of-concept to full-scale deployment. By adopting a strategic, practical approach supported by industry research, organizations can maximize their AI investments and deliver tangible, lasting value.

Let’s talk!

Want to see how ChatGenie can help you grow your business and deploy AI-powered Customer Support confidently?

Book a free consultation call here:

👉 https://chatgenie.ph/book-a-call
